SharedNeRF

Leveraging Photorealistic and View-dependent Rendering for Real-time and Remote Collaboration

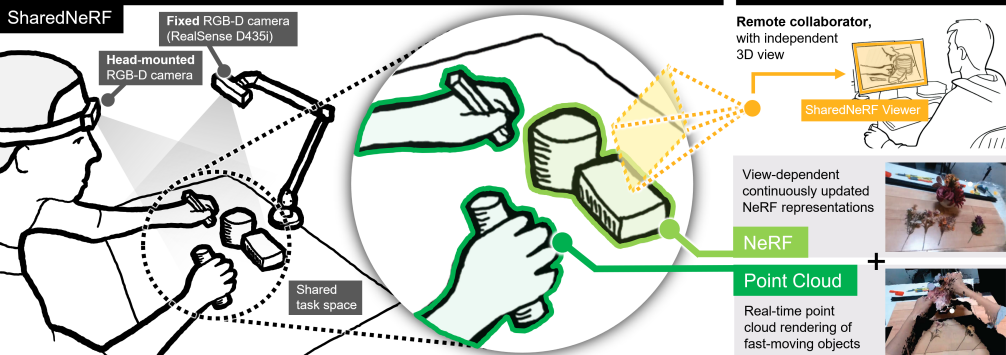

Abstract: Reviewing or inspecting physical artifacts and prototypes requires collaborators to examine different aspects of a design or piece of hardware in detail. When collaborators are remote, sharing views of the physical environment becomes difficult to coordinate. Video-conferencing tools often fail to provide the viewpoints a remote viewer wants. While RGB-D cameras offer 3D views, they lack the necessary fidelity. We introduce SharedNeRF, a system designed to enhance synchronous remote collaboration by leveraging the photorealistic and view-dependent nature of Neural Radiance Fields (NeRF). The system pairs the high visual quality of NeRF rendering with the immediacy of a point cloud, combining the two while carefully accommodating dynamic elements in the shared space, such as hand gestures and moving objects. A head-mounted camera collects the data, from which the system builds a volumetric task space on the fly and updates it as the space changes. In our preliminary study, participants successfully completed a flower arrangement task, benefiting from SharedNeRF's ability to render the space in high fidelity from various viewpoints.

Honorable Mention Award at CHI 2024

ACM-DL: https://dl.acm.org/doi/10.1145/3613904.3642945

Project video